HI-BAR (Had I Been A Reviewer)

A post-publication review of Anguera et al. (2013). Video game training enhances cognitive control in older adults. Nature, 501, 97-101.

For more information about HI-BAR reviews, see my post from earlier today.

In a paper published this week in Nature, Anguera et al reported a study in which older adults were trained on a driving video game for 12 hours. Approximately 1/3 of the participants engaged in multitasking training (both driving and detecting signs), another 1/3 did the driving or sign tasks separately without having to do both at once, and the final 1/3 was a no-contact control. The key findings in the paper:

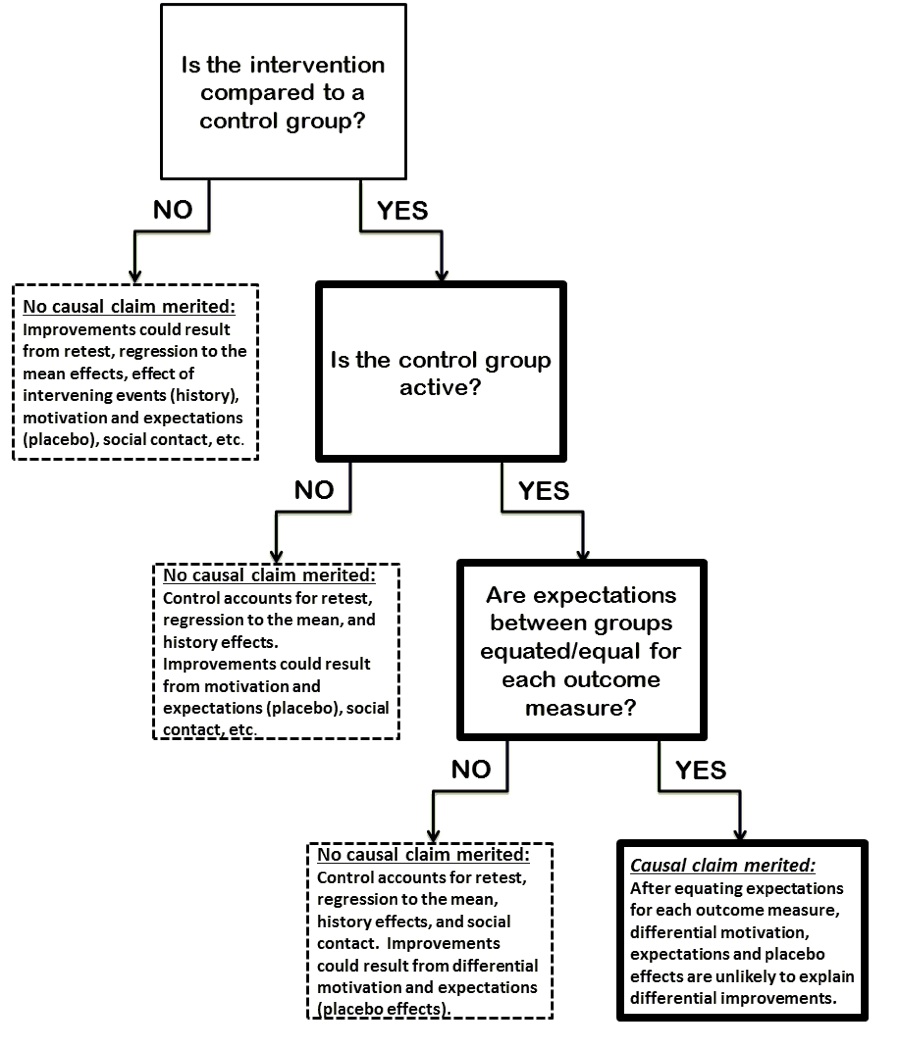

- After multitasking training, the seniors attained "levels beyond those achieved by untrained 20-year-old participants, with gains persisting for 6 months"

- Multitasking training "resulted in performance benefits that extended to untrained cognitive control abilities"

- Neural measures of midline-frontal theta power and frontal-parietal theta coherence correlated with these improvements

This paper, published in a top journal, is one of many recent papers touting the power of video games to improve cognition, and like many of them it has received glowing (almost breathless) media coverage: The NY Times reports "A Multitasking Video Game Makes Old Brains Act Younger." A story in Nature claims "Gaming Improves Multitasking Skills." The Atlantic titles its story "How to Rebuild an Attention Span." (Here's one exception that notes a few limitations.)

In several media quotes, the senior author of the paper (Gazzaley) admirably cautions against over-hyping these findings (e.g., "Video games shouldn’t now be seen as a guaranteed panacea" in the Nature story). Yet over-hyping is exactly what we have in the media coverage (and a bit in the paper as well).

The research is not bad. It's a reasonable, publishable first study that requires a bit more qualification and more limited conclusions: some of the strongest claims are not justified, the methods and findings have limitations, and none of those shortcomings are acknowledged or addressed. If you are a regular reader of this blog, you're familiar with the problems that plague most such studies. Unfortunately, it appears that the reviewers, editors, and authors did not address them.

In the spirit of Rolf Zwaan's recent "50 questions" post (although this paper is far stronger than the one he critiqued), here are 19 comments/questions about the paper and supplementary materials (in a somewhat arbitrary order). I hope that the authors can answer many of these questions by providing more information. Some might be harder to address. I would be happy to post their response here if they would like.

19 Questions and Comments

1. The sample size is small given the scope of the claims, averaging about 15 per group. That's worrisome: it's too small a sample to be confident that random assignment compensates for important unknown differences between the groups.

2. The no-contact control group is of limited value. All it tells us is whether the training group improved more than would be expected from just re-taking the same tests. It's not an adequate control group to draw any conclusions about the effectiveness of training. It does nothing to control for motivation, placebo effects, differences in social contact, differences in computer experience, etc. Critically, the relative improvements due to multitasking training reported in the paper are consistently weaker (and fewer are statistically significant) when the comparison is to the active "single task" control group. According to Supplementary Table 2, out of the 11 reported outcome measures, the multitasking group improved more than the no-contact group on 5 of those measures, and they improved more than the single-task control on only 3.

3. The dual-task element of multitasking is the mechanism that purportedly underlies transfer to the other cognitive tasks, and neither the active nor the no-contact control included that interference component. If neither control group had the active ingredient, why were the effects consistently weaker when the multitasking group was compared to the single-task group than when it was compared to the no-contact group? That suggests the possibility of a differential placebo effect: regardless of whether the condition included the active ingredient, participants might improve because they expected to improve.

4. The active control group is relatively good (compared to those often used in cognitive interventions): it uses many of the same elements as the multitasking group and is fairly closely matched. But the study included no checks for differential expectations between the two training groups. If participants expected greater improvements on some outcome measures from multitasking training than from single-task training, then some or all of the benefits for various outcome measures might have been due to expectations rather than to any benefits of dual-task training. For details, see our paper in Perspectives that discusses this pervasive problem with active control groups. If you want the shorter version, see my blog post about it. Just because a control group is active does not mean that it accounts for differential expectations across conditions.

5. The paper reports that improvements in the training task were maintained for 6 months. That's welcome information, but not particularly surprising (see #13 below). The critical question is whether the transfer effects were long-lasting. Were they? The paper doesn't say. If they weren't, then all we know is that subjects retained the skills they had practiced, and we know nothing about the long-term consequences of that learning for other cognitive skills.

6. According to Figure 9 in the supplement, 23% of the participants who enrolled in the study were dropped from the study or analyses (60 enrolled, 46 completed the study). Did dropout or exclusion differentially affect one group? If participants were dropped based on their performance, how were the cutoffs determined? Did the number of subjects excluded for each reason vary across groups? Are the results robust to different cutoffs? What are the implications for the broad use of this type of training if nearly a quarter of elderly adults cannot do the tasks adequately?

7. Supplementary Table 2 reports 3 significant outcome measures out of 11 tasks/measures (when comparing to the active control group). Many of those tasks include multiple measures and could have been analyzed differently. Consider also that each measure could be compared to either control group and that it also would have been noteworthy if the single-task group had outperformed the no-contact group. That means there were a very large number of possible statistical tests that, if significant, could have been interpreted as supporting transfer of training. I see no evidence of correction for multiple tests. Only a handful of these many tests were significant, and most were just barely so (the session x group interactions were p=.08, p=.03, and p=.02). For the crucial comparison of the multitasking group to each control group, the table reports only a "yes" or "no" for statistical significance at .05, and those p values must be close to that boundary. (There also are oddities in the table, like a reported significant effect with d=.67 but a non-significant one with d=.68 for the same group comparison.) With correction for multiple comparisons, I'm guessing that none of these effects would reach statistical significance. A confirmatory replication with a larger sample would be needed to show that the few significant results (with small sample sizes) were not just false positives.

8. The pattern of outcome measures is somewhat haphazard and inconsistent with the hypothesis that dual-task interference is the reason for differential improvements. For example, if the key ingredient in dual-task training is interference, why didn't multitasking training lead to differential improvement on the dual-task outcome measure? That lack of a critical finding is largely ignored. Similarly, why was there a benefit for the working memory task that didn't involve distraction/interference? Why wasn't there a difference in the visual short term memory task both with and without distraction? Why was there a benefit for the working memory task without distraction (basically a visual memory task) but not the visual memory task? The pattern of improvements seems inconsistent with the proposed mechanism for improvement.

9. The study found that practice on a multitasking video game improves performance on that game to the level of a college student. Does that mean that the game improved multitasking abilities to the level of a 20-year-old? No, although you'd never know that from the media coverage. The actual finding is that after 12 hours of practice on a game, seniors play as well as a 20-year-old who is playing the game for the first time. The finding does not show that multitasking training improved multitasking more broadly. In fact, it did not even transfer to a different dual task. Did they improve to the level of 20-year-olds on any of the transfer tasks? That seems unlikely, but if they did, that would be bigger news.

10. The paper reports only difference scores and does not report any means or standard deviations. This information is essential to help the reader decide whether improvements were contaminated by regression to the mean or influenced by outliers. Perhaps the authors could upload the full data set to openscienceframework.org or another online repository to make those numbers available?

11. Why are there such large performance decreases in the no-contact group (Figures 3a and 3b of the main paper)? This is a slowing of 100 ms, a pretty massive decline for just one month of aging. Most of the other data are presented as z-scores, so it's impossible to tell whether the reported interactions are driven by a performance decrease in one or both of the control groups rather than an improvement in the multitasking group. That's another reason why it's essential to report the performance means and standard deviations for both the pre-test and the post-test.

12. It seems a bit generous to claim (p. 99) that, in addition to the significant differences on some outcome measures, there were trends for better performance in other tasks like the UFOV. Supplementary Figure 15 shows no difference in UFOV improvements between the multitasking group and the no-contact control. Moreover, because these figures show z-scores, it's impossible to tell whether the single-task group is improving less or even showing worse performance. Again, we need the means for pre- and post-testing to evaluate the relative improvements.

13. Two of the core findings of this paper, that multitasking training can improve the performance of elderly subjects to the levels shown by younger subjects and that those improvements last for months, are not novel. In fact, they were demonstrated nearly 15 years ago in a paper that wasn't cited. Kramer et al. (1999) found that giving older adults dual-task training led to substantial improvements on the task, reaching the levels of young adults after a small amount of training. Moreover, the benefits of that training lasted for two months. Here are the relevant bits from the Kramer et al. abstract:

Young and old adults were presented with rows of digits and were required to indicate whether the number of digits (element number task) or the value of the digits (digit value task) were greater than or less than five. Switch costs were assessed by subtracting the reaction times obtained on non-switch trials from trials following a task switch.... First, large age-related differences in switch costs were found early in practice. Second, and most surprising, after relatively modest amounts of practice old and young adults switch costs were equivalent. Older adults showed large practice effects on switch trials. Third, age-equivalent switch costs were maintained across a two month retention period.

14. While we're on the subject of novelty, the authors state in their abstract: "These findings ... provide the first evidence, to our knowledge, of how a custom-designed video game can be used to assess cognitive abilities across the lifespan, evaluate underlying neural mechanisms, and serve as a powerful tool for cognitive enhancement." Unfortunately, they seem not to have consulted the extensive literature on the effects of training with the custom-made game Space Fortress. That game was designed by cognitive psychologists and neuroscientists in the 1980s to study different forms of training and to measure cognitive performance. It includes components designed to train and test memory, attention, motor control, etc. It has been used with young and old participants, and it has been studied using ERP, fMRI, and other measures. The task has been used to study cognitive training and transfer of training both to laboratory tasks and to real-world performance. It has also been used to study different forms of training, some of which involve explicit multitasking and others that involve separating different task components. There are dozens of papers (perhaps more than 100) using that game to study cognitive abilities, training, and aging. Those earlier studies suffered from many of the same problems that most training interventions do, but they do address the same issues studied in this paper. The new game looks much better than Space Fortress, and undoubtedly is more fun to play, but it's not novel in the way the authors claim.

15. Were the experimenters who conducted the cognitive testing blind to the condition assignment? That wasn't stated, and if they were not, then experimenter demands could contribute to differential improvements during the post-test.

16. Could the differences between conditions be driven by differences in social contact and computer experience? The extended methods state, "if requested by the participant, a laboratory member would visit the participant in their home to help set up the computer and instruct training." How often was such assistance requested? Did the rates differ across groups? Later, the paper states, "All participants were contacted through email and/or phone calls on a weekly basis to encourage and discuss their training; similarly, in the event of any questions regarding the training procedures, participants were able to contact the research staff through phone and email." Presumably, the authors did not really mean "all participants." What reason would the no-contact group have to contact the experimenters, and why would the experimenters check in on their training progress? As noted earlier, differences like this are one reason why no-contact controls are entirely inadequate for exploring the necessary ingredients of a training improvement.

17. Most of the assessment tasks were computer based. Was there any control for prior computer experience or the amount of additional assistance each group needed? If not, the difference between these small samples might partly be driven by baseline differences in computer skills that were not equally distributed across conditions. The training tasks might also have trained the computer skills of the older participants or increased their comfort with computers. If so, improved computing skills might account for any differences in improvement between the training conditions and the no-contact control.

18. The paper states, "Given that there were no clear differences in sustained attention or working memory demands between MTT and STT, transfer of benefits to these untrained tasks must have resulted from challenges to overlapping cognitive control processes." Why are there no differences? Presumably maintaining both tasks in mind simultaneously in the multitasking condition places some demand on working memory. And the need to devote attention to both tasks might place a greater demand on attention as well. Perhaps the differences aren't clear, but it seems like an unverified assumption that the two conditions tap these processes equally.

19. The paper reports a significant relationship between brain measures and TOVA improvement (p = .04). The “statistical analyses” section reports that one participant was excluded for not showing the expected pattern of results after training (increased midline frontal theta power). Is this a common practice? What is the p value of the correlation when this excluded participant is included? Why aren’t correlations reported for the relationship between the transfer tasks and training performance or brain changes for the single-task control group? If the same relationships exist for that group, then that undermines the claim that multitask training is doing something special. The authors report that these relationships aren't significant, but the ones for the multitasking group are not highly significant either, and the presence of a significant relationship in one case and not in the other does not mean that the effects are reliably different for the two conditions.
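As a rough illustration of the concern in point 1, the sketch below computes how often random assignment alone leaves two groups differing at baseline by more than half a standard deviation on some trait. It assumes a normally distributed trait with unit variance, independent traits, and the approximate group size of 15 mentioned in point 1; the function names are hypothetical, and nothing here uses data from the study.

```python
from statistics import NormalDist


def prob_baseline_imbalance(n_per_group: int, threshold_d: float) -> float:
    """Chance that two randomly assigned groups differ by more than
    threshold_d standard deviations on one normal, unit-variance trait."""
    se_diff = (2 / n_per_group) ** 0.5  # SD of the difference in group means
    return 2 * (1 - NormalDist().cdf(threshold_d / se_diff))


def prob_any_imbalance(n_per_group: int, threshold_d: float, num_traits: int) -> float:
    """Chance that at least one of num_traits independent traits is imbalanced."""
    p_one = prob_baseline_imbalance(n_per_group, threshold_d)
    return 1 - (1 - p_one) ** num_traits


for n in (15, 50, 150):
    print(f"n={n:>3} per group: "
          f"one trait {prob_baseline_imbalance(n, 0.5):.2f}, "
          f"any of 5 traits {prob_any_imbalance(n, 0.5, 5):.2f}")
```

Under these assumptions, a half-SD imbalance on a single trait shows up in roughly one of every six random splits with 15 per group, and the chance grows quickly once several unmeasured traits (computer experience, motivation, health) are considered.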
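The multiple-comparisons problem in point 7 can be sketched with two standard calculations: the familywise error rate for a given number of independent tests when every null hypothesis is true, and Holm's step-down correction. The three session x group p values come from point 7; treating them as the only tests is a deliberately generous assumption, since the number of possible tests was much larger. The function names are illustrative.

```python
def familywise_error_rate(num_tests: int, alpha: float = 0.05) -> float:
    """Probability of at least one false positive across independent tests
    when every null hypothesis is true."""
    return 1.0 - (1.0 - alpha) ** num_tests


def holm_bonferroni(p_values, alpha=0.05):
    """Holm's step-down procedure; returns True where the null is rejected."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    rejected = [False] * m
    for rank, i in enumerate(order):
        # Compare the k-th smallest p value (k = rank + 1) against alpha / (m - rank).
        if p_values[i] <= alpha / (m - rank):
            rejected[i] = True
        else:
            break  # once one test fails, all larger p values are retained
    return rejected


reported = [0.08, 0.03, 0.02]  # session x group interaction p values from point 7
print(holm_bonferroni(reported))            # [False, False, False]
print(round(familywise_error_rate(11), 2))  # 0.43
```

Even under this generous assumption, the smallest p value (.02) exceeds Holm's first threshold of .05/3, so none of the three survives correction. And if the 11 outcome measures were independent with no true effects, the chance of at least one spurious p < .05 would already be about 43%.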
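Point 10 mentions regression to the mean. The simulation below involves no training effect at all: each observed score is a stable true ability plus independent measurement noise. People selected for low pre-test scores nonetheless appear to "improve" at post-test, and a small group that happens to start low by chance behaves the same way. The noise levels, sample size, and selection rule are arbitrary assumptions chosen for illustration; the point is only why pre- and post-test means and standard deviations are needed to rule this out.

```python
import random
import statistics


def simulate_regression_to_mean(n: int = 10_000, true_sd: float = 1.0,
                                noise_sd: float = 1.0, seed: int = 1):
    """Pre/post scores with NO training effect: observed score equals a
    stable true ability plus independent measurement noise at each test."""
    rng = random.Random(seed)
    true_scores = [rng.gauss(0.0, true_sd) for _ in range(n)]
    pre = [t + rng.gauss(0.0, noise_sd) for t in true_scores]
    post = [t + rng.gauss(0.0, noise_sd) for t in true_scores]
    gains = [b - a for a, b in zip(pre, post)]

    # Keep the quarter of people with the lowest observed pre-test scores.
    cutoff = sorted(pre)[n // 4]
    low_gains = [g for g, p in zip(gains, pre) if p < cutoff]
    return statistics.mean(gains), statistics.mean(low_gains)


overall_gain, low_scorer_gain = simulate_regression_to_mean()
print(f"mean gain, everyone:          {overall_gain:+.2f}")
print(f"mean gain, lowest pre-scores: {low_scorer_gain:+.2f}")
```

The whole sample shows essentially no change, while the low scorers show a sizable apparent gain that comes entirely from their unlucky pre-test noise.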
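Points 11 and 12 note that z-scored results cannot show which group moved. Assuming the z-scores are computed over the pooled change scores of all participants (the exact standardization used in the paper is not described in this review), the toy example below shows two very different scenarios, one in which the multitasking group improves and one in which the control group declines, producing identical z-scores. All numbers are made up.

```python
import math
import statistics


def zscore(values):
    """Standardize values to mean 0 and (sample) standard deviation 1."""
    mu = statistics.mean(values)
    sd = statistics.stdev(values)
    return [(v - mu) / sd for v in values]


# Hypothetical change scores (positive = improvement), five people per group.
# Scenario A: the multitasking group improves; the control group stays flat.
mtt_a = [40, 55, 60, 45, 50]
ctrl_a = [-5, 5, 0, 10, -10]

# Scenario B: the multitasking group stays flat; the control group gets worse.
mtt_b = [v - 50 for v in mtt_a]
ctrl_b = [v - 50 for v in ctrl_a]

z_a = zscore(mtt_a + ctrl_a)
z_b = zscore(mtt_b + ctrl_b)

# Shifting every score by the same amount leaves the z-scores unchanged.
print(all(math.isclose(a, b) for a, b in zip(z_a, z_b)))  # True
print("raw group means, A:", statistics.mean(mtt_a), statistics.mean(ctrl_a))
print("raw group means, B:", statistics.mean(mtt_b), statistics.mean(ctrl_b))
```

Only the raw means distinguish the two stories, which is why reporting them matters.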
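The last part of item 19, that a significant correlation in one group and a non-significant one in another does not show the correlations differ, can be checked with a standard test for two independent correlations based on Fisher's r-to-z transformation. The correlations below are hypothetical (the single-task values are not reported in the paper), the group size of 15 follows the approximate figure in point 1, and the per-correlation p values use the normal approximation rather than the exact t test.

```python
import math
from statistics import NormalDist


def two_sided_p(z: float) -> float:
    """Two-sided p value for a standard normal test statistic."""
    return 2 * (1 - NormalDist().cdf(abs(z)))


def correlation_p(r: float, n: int) -> float:
    """Approximate p value for H0: rho = 0, using Fisher's z = atanh(r)."""
    return two_sided_p(math.atanh(r) * math.sqrt(n - 3))


def compare_correlations_p(r1: float, n1: int, r2: float, n2: int) -> float:
    """Approximate p value for H0: rho1 == rho2 in two independent groups."""
    se = math.sqrt(1 / (n1 - 3) + 1 / (n2 - 3))
    return two_sided_p((math.atanh(r1) - math.atanh(r2)) / se)


# Hypothetical correlations between brain change and transfer-task change.
p_mtt = correlation_p(0.55, 15)  # multitasking group
p_stt = correlation_p(0.20, 15)  # single-task group
p_diff = compare_correlations_p(0.55, 15, 0.20, 15)
print(f"multitasking p = {p_mtt:.3f}, single-task p = {p_stt:.3f}, "
      f"difference p = {p_diff:.3f}")
```

With these made-up values, the multitasking correlation clears p < .05 and the single-task one does not, yet the test of the difference between them is far from significant (p around .3).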

Conclusions

Is this a worthwhile study? Sure. Is it fundamentally flawed? Not really. Does it merit extensive media coverage due to its importance and novelty? Probably not. Should seniors rush out to buy brain training games to overcome their real-world cognitive declines? Not if their decision is based on this study. Should we trust claims that such games might have therapeutic benefits? Not yet.

Even if we accept all of the findings of this paper as correct and replicable, nothing in this study shows that the game training will improve an older person's ability to function outside of the laboratory. Claims of meaningful benefits, either explicit or implied, should be withheld until demonstrations show improvements on real or simulated real-world tasks as well.

This is a good first study of the topic, and it provides a new and potentially useful way to measure and train multitasking, but it doesn't merit quite the exuberance displayed in media coverage of it. If I were to rewrite the abstract to reflect what the study actually showed, it might sound something like this:

In three studies, we validated a new measure of multitasking, an engaging video game, by replicating prior evidence that multitasking declines linearly with age. Consistent with earlier evidence, we find that 12 hours of practice with multitasking leads to substantial performance gains for older participants, bringing their performance to levels comparable to those of 20-year-old subjects performing the task for the first time. And, they remained better at the task even after 6 months. The multitasking improvements were accompanied by changes to theta activity in EEG measures. Furthermore, an exploratory analysis showed that multitasking training led to greater improvements than did an active control condition for a subset of the tasks in a cognitive battery. However, the pattern of improvements on these transfer tasks was not entirely consistent with what we might expect from multitasking training, and the active control condition did not necessarily induce the same expectations for improvement. Future confirmatory research with a larger sample size and checks for differential expectations is needed to confirm that training enhances performance on other tasks before strong claims about the benefits of training are merited. The video game multitasking training we developed may prove to be a more enjoyable way to measure and train multitasking in the elderly.